Access a .pdf version of the technical program and the program-at-a-glance here.

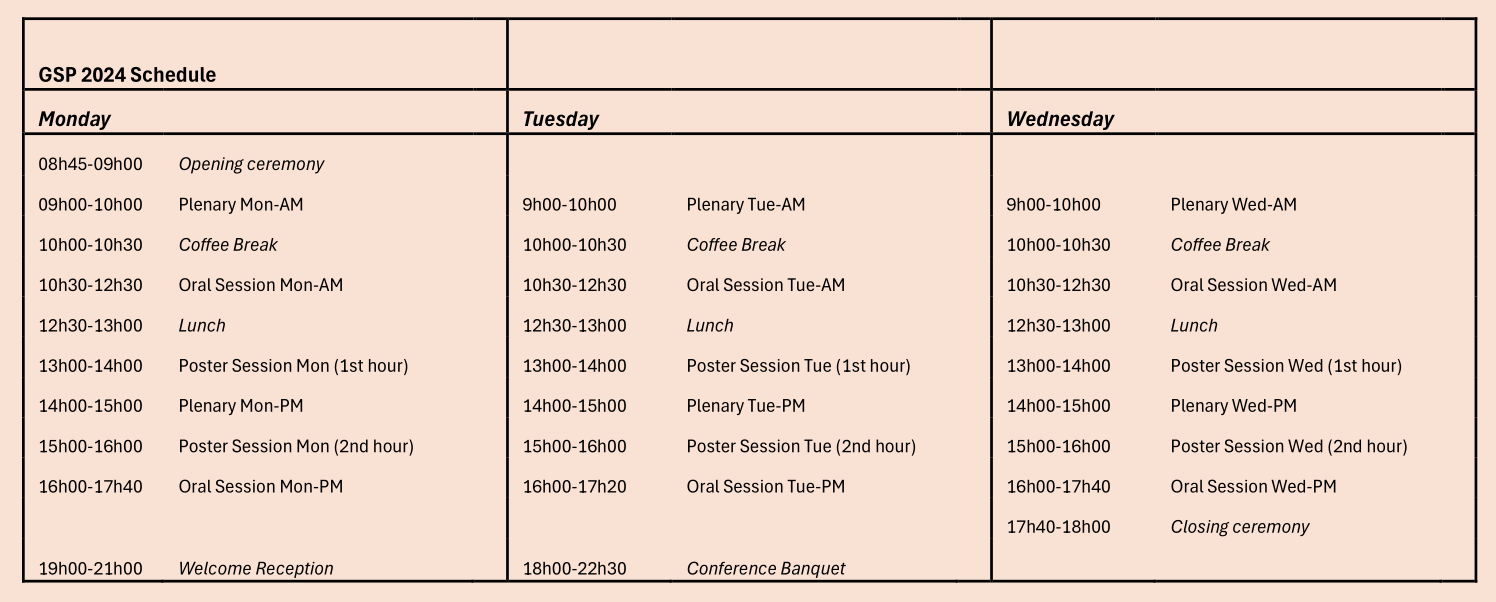

Program-At-A-Glance

General Information

Lectures in the oral sessions are 20 minutes long (including Q&A). The poster format is flexible, but A0 size in portrait orientation is recommended.

There will be a single poster session per day. The session is held in two time slots (13h00 to 14h00 and 15h00 to 16h00), but the same posters are presented in both.

Plenary Talks

Plenary Mon-AM (Monday 9:00 - 10:00)

Georgios B. Giannakis, University of Minnesota

Title: Kernel-driven and Learnable Self-Supervision over Graphs

Abstract: Self-supervision (SeSu) has gained popularity for data-hungry training of machine learning models, especially those involving large-scale graphs, where labeled samples are scarce or even unavailable. Main learning tasks in such setups are ill-posed, and SeSu renders them well-posed by relying on abundant unlabeled data as input, to yield low-dimensional embeddings of a reference (auxiliary) model output. In this talk, we first outline SeSu approaches, specialized reference models, and their links with (variational) auto-encoders, regularization, and semi-supervised, transfer, meta, and multi-view learning; we also discuss their challenges and opportunities when multi-layer graph topologies and multi-view data are present, when nodal features are absent, and when the ad hoc selection of a reference model yields embeddings that are not optimally designed for the downstream main learning task. Next, we present our novel SeSu approach, which selects the reference model to output either a prescribed kernel or a learnable weighted superposition of kernels from a prescribed dictionary. As a result, the learned embeddings offer a novel, reduced-dimensionality estimate of the basis kernel, and thus an efficient parametric estimate of the main learning function at hand, which belongs to a reproducing kernel Hilbert space. If time allows, we will also cover online variants for dynamic settings, and a regret analysis founded on the so-termed neural tangent kernel framework to assess how effectively the learned embeddings approximate the underlying optimal kernel(s). We will wrap up with numerical tests using synthetic and real datasets to showcase the merits of kernel-driven and learnable (KeLe) SeSu relative to alternatives. The real-data experiments will also compare KeLe-SeSu with auto-encoders and graph neural networks (GNNs), and further test KeLe-SeSu on reference maps with masked inputs and predicted outputs, which are popular in large language models (LLMs).

Plenary Mon-PM (Monday 14:00 - 15:00)

Francesca Parise, Cornell University

Title: Large-Scale Network Dynamics: Achieving Tractability via Graph Limits

Abstract: Network dynamical systems provide a versatile framework for studying the interplay between the structure of complex networks and the dynamic behavior of their constituent entities, offering insights into diverse phenomena across disciplines such as physics, biology, sociology, and engineering. As the size of the underlying network increases, however, a number of new challenges arise. For example, collecting exact network data may become too costly, and planning optimal network interventions may become computationally intractable. In this talk, I will show how the theory of graph limits can be used to provide new insights into graph processes evolving over large random networks. First, I will show how graph limits can be used to define tractable infinite-population models of network systems while maintaining agent heterogeneity. Second, I will show how insights derived for such infinite-population models can be applied to study large but finite networks. I will illustrate the benefits of this graph limit approach for broad classes of graph processes, including strategic interactions, multi-agent learning, and synchronization dynamics.

Plenary Tue-AM (Tuesday 9:00 - 10:00)

Smita Krishnaswamy, Yale University

Title: Molecular discovery and analysis using geometric scattering and deep learning

Abstract: Here we present frameworks that integrate graph signal processing with deep learning in order to enhance graph representation learning as well as predictive modeling of graph dynamics. A key innovation is our development of learnable geometric scattering and its novel extensions (bi-Lipschitz scattering, directed scattering, and scattering with attention), and their incorporation as layers into deep neural networks in order to improve expressiveness and downstream performance. We first discuss the enhanced expressiveness of these methods and their ability to capture geometric properties. Then, we show applications in molecule generation, dynamics interpolation, and property prediction in small molecules and proteins. Finally, I will show applications where the structure captured by graph signal processing is augmented by sequence analysis to elucidate basic RNA transcriptional biology.

Plenary Tue-PM (Tuesday 14:00 - 15:00)

Stefan Vlaski, Imperial College London

Title: Beyond Consensus-Based Methods for Decentralized Learning: Where Graph Signal Processing and Optimization Meet

Abstract: Recent years have been marked by a proliferation of dispersed data and computational capabilities. Data is generated and processed on our mobile devices, in sensors scattered throughout smart cities and smart grids, and in vehicles on the road. Central aggregation of raw data is frequently neither efficient nor feasible, due to concerns around communication constraints, privacy, and robustness to link and node failure. The purpose of decentralized optimization and learning is then to devise intelligent systems by means of decentralized processing and peer-to-peer interactions, as defined by an underlying graph topology. Classically, the objective in decentralized learning has been to match the dynamics and performance of a centralized fusion center, and to minimize the impact of the network on the learning dynamics. In convex, cooperative, and homogeneous environments, this paradigm is indeed optimal and can be shown to match statistical and information-theoretic lower bounds. Modern learning applications, however, differ substantially from classical settings: they are highly non-convex, underdetermined, heterogeneous, and subject to non-cooperative and adversarial behavior. We will argue that effective learning in these environments requires deviation from the traditional consensus-based learning paradigm, and a refined characterization of the inductive bias and learning dynamics induced by the underlying graph topology. We will survey recent results emerging from this point of view.

Plenary Wed-AM (Wednesday 9:00 - 10:00)

Piet Van Mieghem, TU Delft

Title: Linear Processes on Networks

Abstract: From a network science point of view, we will discuss linear processes on a graph, which are the simplest but also the most elegant processes. Real, time-dependent processes require us to introduce the beautiful linear state space (LSS) model (in discrete time). We will briefly discuss two applications of LSS. Thereafter, we switch to the Laplacian matrix of a graph. From the spectral decomposition of the Laplacian, we will introduce the simplex of an undirected, possibly weighted graph. Besides the topology domain and the spectral domain, the simplex geometry of a graph is a third, equivalent description of a graph. Continuous-time Markov processes are described by the (linear) Chapman-Kolmogorov equations, in which the infinitesimal generator is, in fact, a weighted Laplacian of the Markov graph. We will discuss Markovian epidemics on a fixed graph, show the limitations of the linear theory on graphs, and introduce a mean-field approximation, a powerful method from physics that results in nonlinear governing equations.
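As a small, unofficial illustration of the Markov-generator remark (not material from the talk), the Python sketch below assumes a hypothetical four-state chain with symmetric transition rates `W`. It forms the weighted Laplacian L = D - W, takes the generator as Q = -L (so every row sums to zero), and solves the forward Chapman-Kolmogorov equation dp/dt = pQ as p(t) = p(0)e^{Qt} using the spectral decomposition of L. The helper names `weighted_laplacian` and `markov_propagate` are illustrative only.

```python
import numpy as np

def weighted_laplacian(W: np.ndarray) -> np.ndarray:
    """Return L = D - W for a symmetric, nonnegative weight (rate) matrix W."""
    return np.diag(W.sum(axis=1)) - W

def markov_propagate(W: np.ndarray, p0: np.ndarray, t: float) -> np.ndarray:
    """Solve dp/dt = p Q with generator Q = -L (assumes symmetric rates).

    Because L is symmetric here, e^{-L t} = V diag(e^{-lambda t}) V^T.
    """
    L = weighted_laplacian(W)
    lam, V = np.linalg.eigh(L)                  # spectral decomposition of L
    expQt = V @ np.diag(np.exp(-lam * t)) @ V.T
    return p0 @ expQt                           # state probabilities at time t

# Hypothetical rates on a path graph 0-1-2-3 (assumption, for illustration).
W = np.array([[0.0, 1.0, 0.0, 0.0],
              [1.0, 0.0, 2.0, 0.0],
              [0.0, 2.0, 0.0, 0.5],
              [0.0, 0.0, 0.5, 0.0]])
Q = -weighted_laplacian(W)
print(Q.sum(axis=1))                            # generator rows sum to zero

p0 = np.array([1.0, 0.0, 0.0, 0.0])             # start in state 0 with certainty
for t in (0.0, 1.0, 50.0):
    print(t, np.round(markov_propagate(W, p0, t), 3))
```

Total probability is conserved because L annihilates the all-ones vector, and for a connected graph with symmetric rates p(t) tends to the uniform distribution as t grows. Non-symmetric rates would need a directed Laplacian and a general matrix exponential instead of `eigh`.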

Plenary Wed-PM (Wednesday 14:00 - 15:00)

Sergio Barbarossa, Sapienza University of Rome

Title: Topological signal processing and learning over cell complexes

Abstract: The goal of this lecture is to introduce the basic tools for processing signals defined over a topological space, focusing on simplicial and cell complexes. Processing signals defined over graphs has by now become a mature technology. Graphs, however, are just one example of a topological space, incorporating only pairwise relations. In this lecture, we will motivate the need to generalize graph-based methodologies to higher-order structures, such as simplicial and cell complexes, as spaces able to incorporate higher-order relations in the representation space while still possessing a rich algebraic structure that facilitates the extraction of information. We will motivate the introduction of a Fourier transform and recall the fundamentals of filtering and sampling of signals defined over such spaces. We will then show the impact of imperfect knowledge of the space on the tools used to extract information from data. Next, we will present a probabilistic topological model for multivariate random variables defined over subsets of the space. Finally, we will show how to exploit these tools in the design of topological neural networks operating over signals defined over topological spaces of different orders, and we will present methods to infer the structure of the space from data.
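For readers new to the area, a minimal sketch of one common way to define a Fourier transform for edge signals follows (an illustration, not the lecture's own code). It assumes a hypothetical toy simplicial complex with four nodes, five edges, and a single filled triangle, builds the node-edge and edge-triangle incidence matrices B1 and B2, and uses the eigenvectors of the first-order Hodge Laplacian L1 = B1ᵀB1 + B2B2ᵀ as an edge-frequency basis.

```python
import numpy as np

nodes = 4
edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]   # oriented i -> j with i < j
triangles = [(0, 1, 2)]                            # only this triangle is filled

def incidence_matrices(n, edges, triangles):
    """Boundary operators B1 (nodes x edges) and B2 (edges x triangles)."""
    B1 = np.zeros((n, len(edges)))
    for k, (i, j) in enumerate(edges):
        B1[i, k], B1[j, k] = -1.0, 1.0
    idx = {e: k for k, e in enumerate(edges)}
    B2 = np.zeros((len(edges), len(triangles)))
    for t, (a, b, c) in enumerate(triangles):
        # boundary of [a, b, c] is [b, c] - [a, c] + [a, b]
        B2[idx[(b, c)], t] = 1.0
        B2[idx[(a, c)], t] = -1.0
        B2[idx[(a, b)], t] = 1.0
    return B1, B2

B1, B2 = incidence_matrices(nodes, edges, triangles)
assert np.allclose(B1 @ B2, 0)                     # boundary of a boundary is zero

# First-order Hodge Laplacian: lower (gradient) part plus upper (curl) part.
L1 = B1.T @ B1 + B2 @ B2.T
lam, U = np.linalg.eigh(L1)                        # eigenvectors = edge-frequency basis

flow = np.array([1.0, 0.5, -0.2, 0.3, 0.8])        # hypothetical edge signal
flow_hat = U.T @ flow                              # edge-domain Fourier transform
print(np.round(lam, 3))
print(np.round(flow_hat, 3))
```

Because the triangle (1, 2, 3) is left unfilled, L1 has exactly one zero eigenvalue, whose eigenvector is a harmonic flow associated with that hole; filling it in (adding it to `triangles`) would remove that harmonic component.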

Oral Sessions

Oral Session Mon-AM (Monday 10:30 - 12:30) - Network Topology Inference

- Paper 1: A Novel Smoothness Prior for Hypergraph Machine Learning, Bohan Tang (ID: 58)

- Paper 2: Mitigating subpopulation bias for fair network topology inference, Madeline Navarro (ID: 33)

- Paper 3: Inferring the Topology of a Networked Dynamical Systems, Augusto Santos (ID: 88)

- Paper 4: Sampling and Consensus for Anomalous Edge Detection, Panagiotis Traganitis (ID: 80)

- Paper 5: Introducing Graph Learning over Polytopic Uncertain Graph, Masako Kishida (ID: 5)

- Paper 6: Laplacian-Constrained Cramér-Rao Bound for Networks Applications, Morad Halihal (ID: 73)

Oral Session Mon-PM (Monday 16:00 - 17:40) - Graph Filters

- Paper 1: Median Autoregressive Graph Filters, David Tay (ID: 12)

- Paper 2: On the Stability of Graph Spectral Filters: A Probabilistic Perspective, Ning Zhang (ID: 79)

- Paper 3: Algebraic spaces of filters for signals on graphons, Juan Andrés Bazerque (ID: 60)

- Paper 4: Graph Filtering for Clustering Attributed Graphs, Meiby Ortiz-Bouza (ID: 40)

- Paper 5: HoloNets: Spectral Convolutions do extend to Directed Graphs, Christian Koke (ID: 4)

Oral Session Tue-AM (Tuesday 10:30 - 12:30) - Signal processing on higher-order networks

- Paper 1: Disentangling the Spectral Properties of the Hodge Laplacian: Not All Small Eigenvalues Are Equal, Vincent P. Grande (ID: 32)

- Paper 2: Simplicial Vector Autoregressive Models, Rohan Money (ID: 28)

- Paper 3: Learning Graphs and Simplicial Complexes from Data, Andrei Buciulea (ID: 22)

- Paper 4: Topological Dictionary Learning, Paolo Di Lorenzo (ID: 24)

- Paper 5: Hyperedge Representations with Deep Hypergraph Wavelets: Applications to Spatial Transcriptomics, Xingzhi Sun (ID: 81)

- Paper 6: Presentation by MathWorks

Oral Session Tue-PM (Tuesday 16:00 - 17:20) - Graph learning

- Paper 1: Graph Topology Learning with Functional Priors, Hoi-To Wai (ID: 45)

- Paper 2: Enhanced Graph-Learning Schemes Driven by Similar Distributions of Motifs, Samuel Rey (ID: 55)

- Paper 3: Heterogeneous Graph Structure Learning: A Statistical Perspective, Keyue Jiang (ID: 38)

- Paper 4: Graph Structure Learning with Interpretable Bayesian Neural Networks, Max Wasserman (ID: 21)

Oral Session Wed-AM (Wednesday 10:30 - 12:30) - Geometric deep learning

- Paper 1: Online Time Covariance Neural Networks, Andrea Caballo (ID: 50)

- Paper 2: From Latent Graph to Latent Topology Inference: Differentiable Cell Complex Module, Claudio Battiloro (ID: 23)

- Paper 3: Graph Convolutional Neural Networks in the Companion Model, Shreyas Chaudhari (ID: 67)

- Paper 4: Representing Edge Flows on Graphs via Sparse Cell Complexes, Josef Hoppe (ID: 3)

- Paper 5: Multi-Scale Hydraulic Graph Neural Networks, Roberto Bentivoglio (ID: 59)

- Paper 6: Simplicial Scattering Networks, Sundeep Chepuri (ID: 48)

Oral Session Wed-PM (Wednesday 16:00 - 17:40) - GSP Theory and Methods

- Paper 1: Discrete Integral Operators for Graph Signal Processing, Naoki Saito (ID: 8)

- Paper 2: Möbius Total Variation for Directed Acyclic Graph, Vedran Mihal (ID: 34)

- Paper 3: Involution-Based Graph-Signal Processing, Gerald Matz (ID: 49)

- Paper 4: Blind Deconvolution of Sparse Graph Signals in the Presence of Perturbations, Victor M. Tenorio (ID: 77)

- Paper 5: Quantile-based fitting for graph signals, Kyusoon Kim (ID: 64)

Poster Sessions

Poster Session Mon (Monday 13h00-14h00 & 15h00-16h00) - Euler

- Paper 1: Decentralized and Lifelong-Adaptive Multi-Agent Collaborative Learning, Shuo Tang (ID: 61)

- Paper 2: Online Graph Learning Via Proximal Newton Method from Streaming Data, Carrson Fung (ID: 70)

- Paper 3: Online Graph Filtering Over Expanding Graphs, Bishwadeep Das (ID: 53)

- Paper 4: Hodge-Aware Matched Subspace Detectors, Chengen Liu (ID: 15)

- Paper 5: Windowed Hypergraph Fourier Transform and Vertex-frequency Representation, Alcebiades Dal Col (ID: 35)

- Paper 6: Graph Signal Processing: The 2D Companion Model, John Shi (ID: 66)

- Paper 7: Robust Graph Learning for Classification, Jingxin Zhang (ID: 47)

- Paper 8: Sparse Recovery of Diffused Graph Signals, Gal Morgenstern (ID: 14)

- Paper 9: Learning Stochastic Graph Neural Networks with Constrained Variance, Elvin Isufi (ID: 27)

- Paper 10: On the Impact of Sample Size in Reconstructing Signals with Graph Laplacian Regularisation, Baskaran Sripathmanathan (ID: 86)

- Paper 11: Hypergraph Transformer for Semi-Supervised Classification, Bohan Tang (ID: 68)

- Paper 12: Convolutional GNN to process signals defined over DAGs, Samuel Rey (ID: 87)

- Paper 13: Graph Neural Networks with Adaptive Structure, Ziping Zhao (ID: 26)

- Paper 14: Efficient Task Planning with Taxonomy Graph States and Large Language Models, Zhaoting Li (ID: 51)

- Paper 15: Sampling sparse graph signals, Stefan Kunis (ID: 7)

- Paper 16: Learned Finite-Time Consensus for Distributed Optimization, Aaron Fainman (ID: 78)

- Paper 17: Framelet Message Passing, Yuguang Wang (ID: 29)

Poster Session Tue (Tuesday 13h00-14h00 & 15h00-16h00) - Laplace

- Paper 1: ResolvNet: A Graph Convolutional Network with multi-scale Consistency, Christian Koke (ID: 37)

- Paper 2: Multiscale Graph Signal Clustering, Reina Kaneko (ID: 18)

- Paper 3: On Stability of GCNN Under Graph Perturbations, Jun Zhang (ID: 72)

- Paper 4: Kernel graph filtering – a new method for dynamic sinogram denoising, Jingxin Zhang (ID: 42)

- Paper 5: Data-Aware Dynamic Network Decomposition, Bishwadeep Das (ID: 56)

- Paper 6: Hodge-Compositional Edge Gaussian Processes, Maosheng Yang (ID: 13)

- Paper 7: Estimators for Connection-Laplacian-Based Linear Algebra, Hugo Jaquard (ID: 36)

- Paper 8: Recovering Missing Node Features with Local Structure-based Embeddings, Victor M. Tenorio (ID: 19)

- Paper 9: Seeking universal approximation for continuous counterparts of GNNs on large random graphs, Matthieu Cordonnier (ID: 44)

- Paper 10: Benchmarking Graph Neural Networks with the Quadratic Assignment Problem, Adrien Lagesse (ID: 54)

- Paper 11: Inferring Time-Varying Signal over Uncertain Graphs, Mohammad Sabbaqi (ID: 84)

- Paper 12: Optimal Quasi-clique: Hardness, Equivalence with Densest-k-Subgraph, and Quasi-partitioned Community Mining, Aritra Konar (ID: 9)

- Paper 13: Learning Causal Influences from Social Interactions, Mert Kayaalp (ID: 11)

- Paper 14: Peer-to-Peer Learning + Consensus with Non-IID Data, Srinivasa Pranav (ID: 69)

- Paper 15: Community mining by modeling multilayer networks with Cartesian product graphs, Tiziana Cattai (ID: 65)

- Paper 16: A Rewiring Contrastive Patch PerformerMixer Framework for Graph Representation Learning, Zhongtian Sun (ID: 75)

- Paper 17: Blind identification of overlapping communities from nodal observations, Ruben Wijnands (ID: 57)

Poster Session Wed (Wednesday 13h00-14h00 & 15h00-16h00) - Erdos

- Paper 1: Utilizing graph Fourier transform for automatic Alzheimer's disease detection from EEG signals, Ramnivas Sharma (ID: 6)

- Paper 2: Emergence of Higher-Order Functional Brain Connectivity with Hypergraph Signal Processing, Breno C Bispo (ID: 10)

- Paper 3: Protection Against Graph-Based False Data Injection Attacks on Power Systems, Gal Morgenstern (ID: 16)

- Paper 4: Arbitrarily Sampled Signal Reconstruction Using Relative Difference Features, Chin-Yun Yu (ID: 17)

- Paper 5: Incorporating the spiral of silence into opinion dynamics, Shir Mamia (ID: 20)

- Paper 6: scPrisma infers, filters and enhances topological signals in single-cell data using spectral template matching, Jonathan Karin (ID: 25)

- Paper 7: Graph Neural Network-Based Node Deployment for Throughput Enhancement, Yifei Yang (ID: 30)

- Paper 8: GSP-Traffic Dataset: Graph Signal Processing Dataset Based on Traffic Simulation, Rui Kumagai (ID: 39)

- Paper 9: Attack Graph Model for Cyber-Physical Power Systems Using Hybrid Deep Learning, Alfan Presekal (ID: 43)

- Paper 10: Interpretable Diagnosis of Schizophrenia Using Graph-Based Brain Network Information Bottleneck, Tianzheng Hu (ID: 46)

- Paper 11: Exploiting Variational Inequalities for Generalized Change Detection on Graphs, Juan F Florez (ID: 52)

- Paper 12: Measuring Structure-Function Coupling Using Joint-modes of Multimodal Brain Networks, Sanjay Ghosh (ID: 62)

- Paper 13: Autoregressive GNN for emulating Stormwater Drainage Flows, Alexander Garzón (ID: 63)

- Paper 14: Faster Convergence with Less Communication: Broadcast-Based Subgraph Sampling for Decentralized Learning over Wireless Networks, Zheng Chen (ID: 71)

- Paper 15: Data-driven Polytopic Output Synchronization from Noisy Data, Wenjie Liu (ID: 74)

- Paper 16: A Graph Signal Processing Framework based on Graph Learning and Graph Neural Networks for Mental Workload Classification from EEG signals, Maria a Sarkis (ID: 76)

- Paper 17: State Estimation in Water Distribution Systems using Diffusion on the Edge Space, Bulat Kerimov (ID: 82)

- Paper 18: Neuro-GSTH: Quantitative analysis of spatiotemporal neural dynamics using geometric scattering and persistent homology, Dhananjay Bhaskar (ID: 85)

- Paper 19: PET Image Representation and Reconstruction based on Graph Filter, Jingxin Zhang (ID: 41)